Intro to Data Science

Lecture 5 – Multi-Model Inference

A Guide to Your Process

Scheduling

Learning Objectives

Practice

Supporting Information

Class Discussion

Today’s Plan

- Interactions

- Multi-Model Inference

- Mid-Term Instructor Evaluations

- Free Work on Function Tutorials

Today’s Learning Objectives

After today’s session you will be able to:

- Perform additional statistical tests in R

- Explain an “interaction term” in the context of life sciences

- Compare model strengths in R

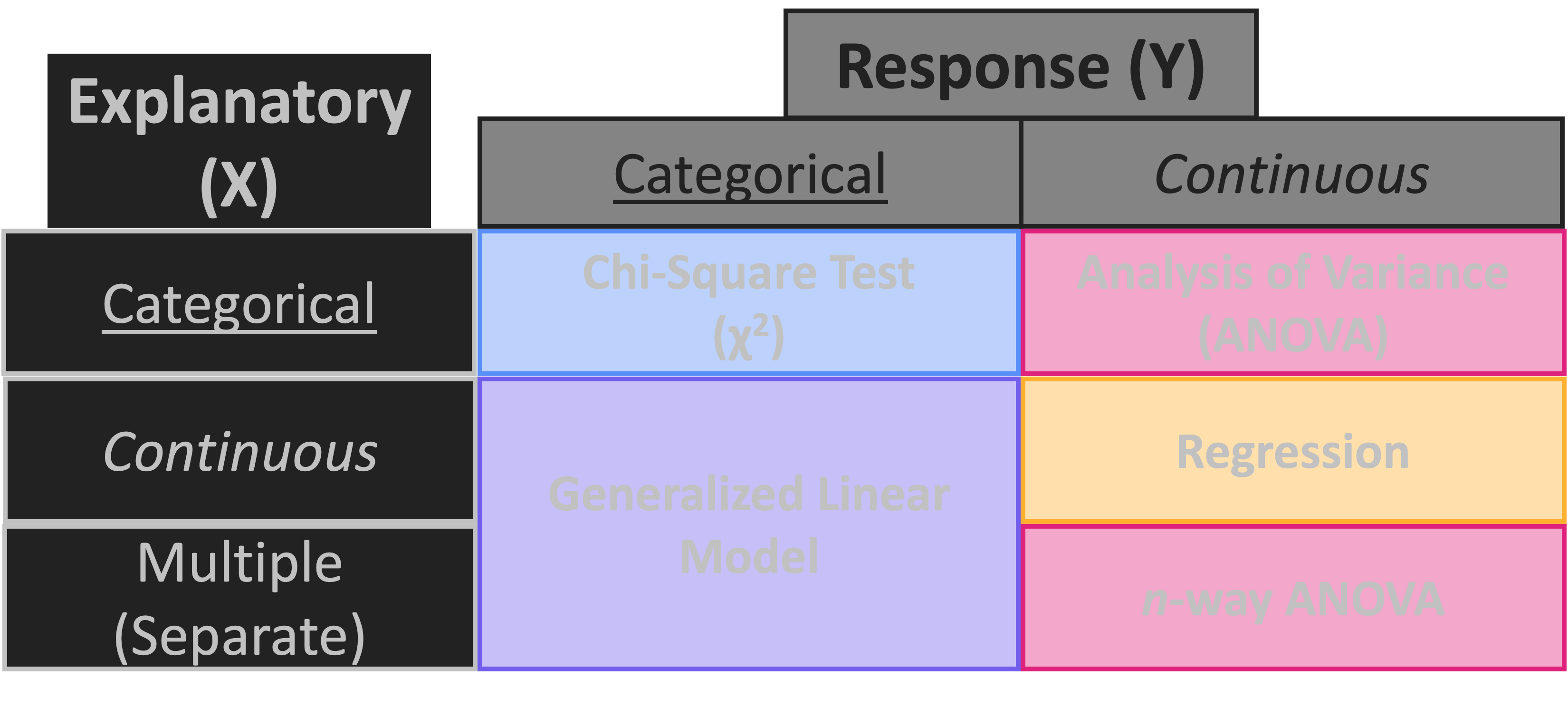

Roadmap Reminder

Interaction Terms

- So far, we’ve assumed the effect on Y is due to each X separately

- In real life, the effect on Y may be due to interactions among X variables!

- Arguably, all of biology lives in these interactions!

Interactions Examples

- Let’s consider some examples to hopefully make this “click” for you

- The number of ant hills (Y) depends on how hot it is (X1) in combination with how rainy it is (X2)

- Raccoons are fatter (Y) when they live close to humans (X1) and the weather is mild (X2)

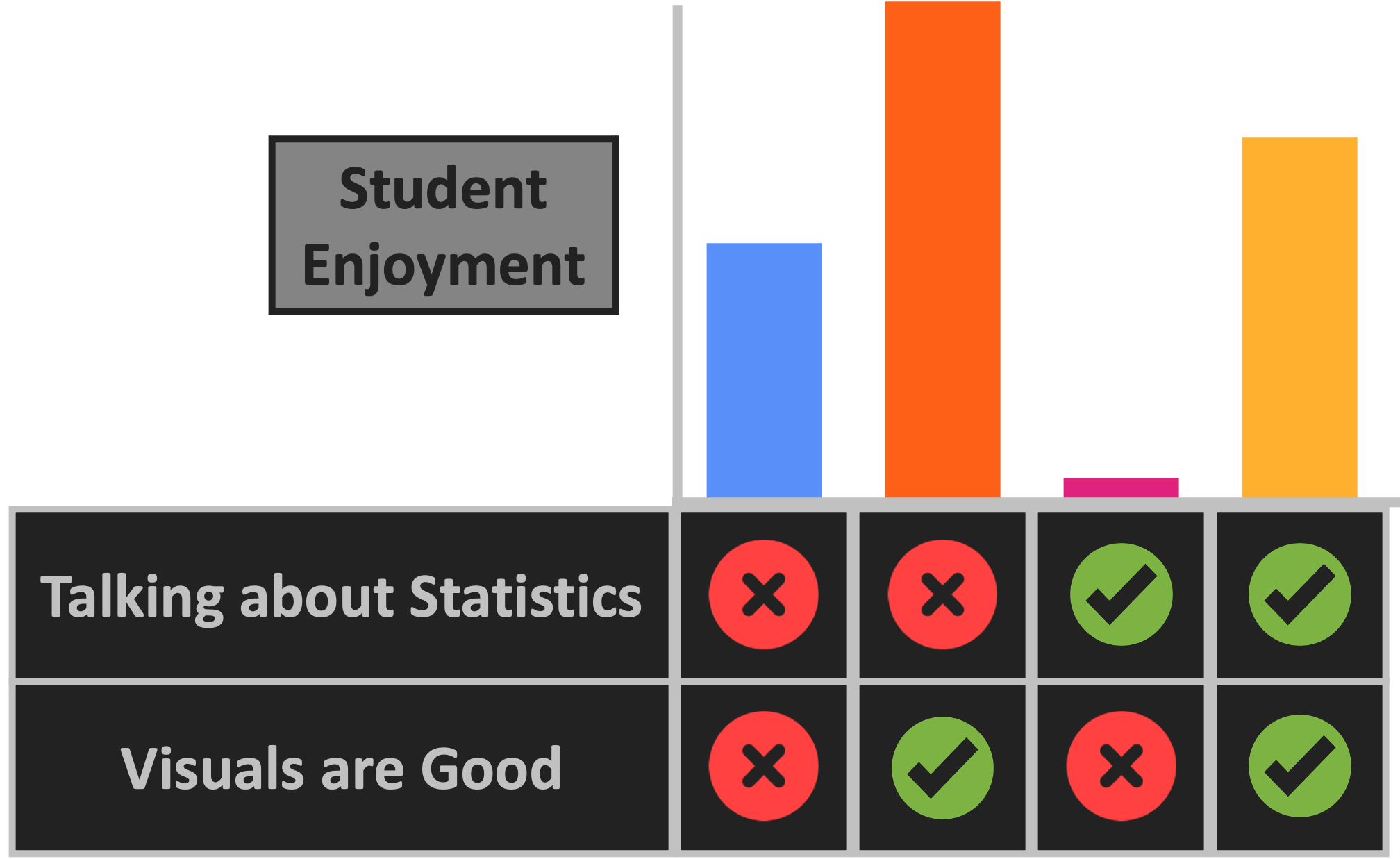

Interaction Visual

- One more example:

- Students enjoy (Y) talking about stats (X1) if there are good visuals (X2)

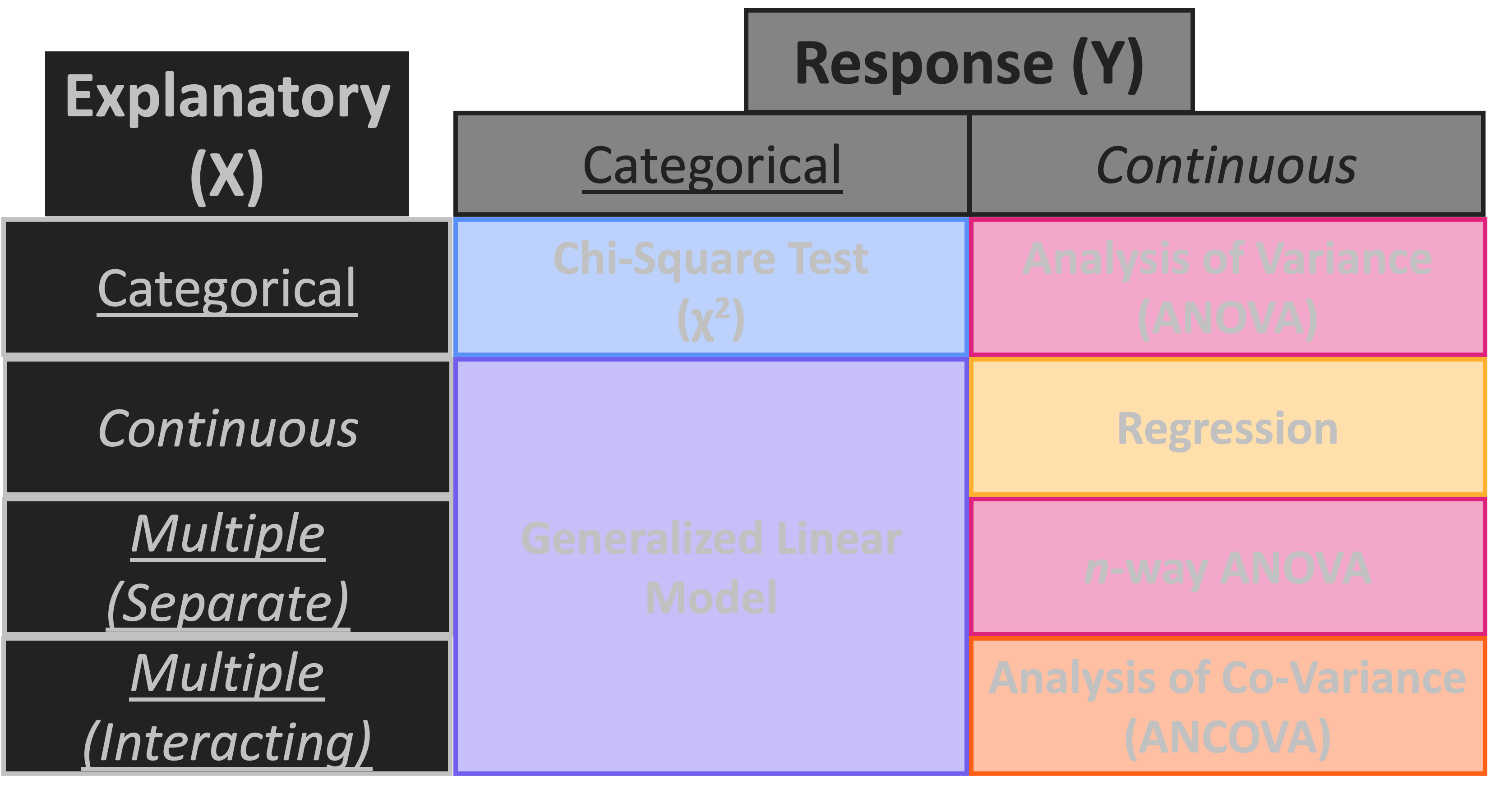

Roadmap Extension: Interactions

R Syntax for Interactions

- Two ways to add an interaction between two explanatory variables:

- Use an asterisk (`*`) between the two terms

- Use a colon (`:`) between the two terms

- Using an asterisk includes both terms separately and their interaction, so `y ~ a * b` is shorthand for `y ~ a + b + a:b`

- Using a colon includes only the interaction term itself
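A quick way to see the difference between the two operators without any data is to let R expand each formula into the list of terms it would fit. Here `y`, `a`, and `b` are placeholder names chosen for illustration; `terms()` only reads the formula, so no dataset is needed.

```r
# Expand each formula into the terms R would include in the model.
# y, a, and b are hypothetical placeholder variable names.
star_terms  <- attr(terms(y ~ a * b), "term.labels")        # asterisk
colon_terms <- attr(terms(y ~ a:b), "term.labels")          # colon only
long_terms  <- attr(terms(y ~ a + b + a:b), "term.labels")  # written out in full

print(star_terms)   # both main effects plus the interaction
print(colon_terms)  # the interaction alone
print(identical(star_terms, long_terms))  # TRUE: `*` is shorthand for a + b + a:b
```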

Analysis of Co-Variance (ANCOVA)

- Multiple X variables and Y is continuous

- X variables may be either categorical or continuous

- Must also include an “interaction term” between (at least) two of the X variables

- Hypothesis: The effect on Y is due to the interaction of X variables

- H0: The effect on Y is not due to interactions among X variables

- Returns a P value for the interaction term and each X variable separately
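A minimal sketch of an ANCOVA-style model in R. Because the penguin data live in an add-on package, this sketch instead uses R's built-in `ToothGrowth` dataset as a stand-in (tooth growth in guinea pigs): `len` is a continuous Y, `supp` is a categorical X (supplement type), and `dose` is a continuous X. The summary table has one row, with its own P value, for each X variable and for the interaction term.

```r
# Built-in dataset (stand-in for illustration):
#   len  = tooth length (continuous Y)
#   supp = supplement type, a factor with levels "OJ" and "VC" (categorical X)
#   dose = dose in mg/day, numeric (continuous X)
data(ToothGrowth)

# supp * dose fits supp, dose, and their interaction supp:dose
tooth_model <- aov(len ~ supp * dose, data = ToothGrowth)

# summary() returns a list; its first element is the ANOVA table
tooth_table <- summary(tooth_model)[[1]]
print(tooth_table)

# Pull out the P value for the interaction term
# (row names in summary.aov tables can be padded with spaces, hence trimws)
interaction_p <- tooth_table[["Pr(>F)"]][trimws(rownames(tooth_table)) == "supp:dose"]
print(interaction_p)
```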

Practice: ANCOVA

- The ANCOVA function is the same as for a regular ANOVA / n-way ANOVA: `aov`

- New penguin-related hypothesis:

- HA: Penguin body mass differs among species and within a species between sexes

- H0: Sex-specific differences in penguin body mass are not species-dependent

- Test HA with an interaction term!

- Was your hypothesis supported?

- What difference(s) do you see between this and a 2-way ANOVA summary table?
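One way to set up this comparison, sketched under the assumption that the data come from the `palmerpenguins` package (its `penguins` data frame has `body_mass_g`, `species`, and `sex` columns). The only change between the two models is `+` versus `*` in the formula.

```r
# Assumes the palmerpenguins package is installed:
#   install.packages("palmerpenguins")
# Keep only the variables used here and drop rows with missing values (sex has NAs)
peng <- na.omit(palmerpenguins::penguins[, c("body_mass_g", "species", "sex")])

# 2-way ANOVA: species and sex as separate, additive effects
two_way <- aov(body_mass_g ~ species + sex, data = peng)

# Same variables plus the species:sex interaction term
with_interaction <- aov(body_mass_g ~ species * sex, data = peng)

# Print both summary tables to compare them row by row
print(summary(two_way))
print(summary(with_interaction))
```

The second table contains an extra `species:sex` row; its P value is the one that tests the interaction hypothesis.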

Temperature Check

How are you Feeling?

Discussion: Null Hypothesis Testing

- What lingering questions do you have on this topic?

- Is the “roadmap” helpful?

- How can I change it to be more helpful for future cohorts of students?

Multi-Model Inference (MMI)

- MMI is an alternative to null hypothesis testing

- P < 0.05 is an arbitrary cutoff!

- Instead, we can make several “candidate models”

- Basically several alternate hypotheses (HA)

- Fit each candidate model to the data (separately) and compare their strength of fit

- The candidate hypothesis whose model fits the data best is supported

“Model Strengths”

- ‘Relative model strengths’ is very different from P values

- Still all about hypothesis testing though!

- P values ask “does this affect things more than if nothing is happening?”

- MMI asks “does this affect things more than other variables/combinations of variables?”

- Model strength is evaluated with an information criterion

- A way of summarizing each candidate model to decide the ‘winner(s)’

Information Criteria

- Most often: Akaike Information Criterion (AIC)

- [Ah-kuh-EE-kay]

- The model with the lowest information criterion score is the best model

- BUT models within 2 AIC points of each other have basically the same strength of fit

- Another arbitrary threshold!
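For reference, the standard definition of AIC, where $k$ is the number of estimated parameters in a model (for a linear model in R this count includes the residual variance) and $\hat{L}$ is the model's maximized likelihood. The "within 2 points" rule of thumb is applied to $\Delta\mathrm{AIC}$, each model's distance from the lowest score among the candidates:

$$
\mathrm{AIC} = 2k - 2\ln\hat{L}, \qquad \Delta\mathrm{AIC}_i = \mathrm{AIC}_i - \mathrm{AIC}_{\min}
$$

The $2k$ term is a penalty for complexity: adding a term (such as an interaction) lowers AIC only if it improves the likelihood enough to pay for its extra parameters.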

AIC Function

- The input to the AIC function is just a list of all your models

- The function is, helpfully, `AIC`

- Fit models using whichever stats test is appropriate

- Then compare AIC scores for each model
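A minimal sketch of the two steps above, using R's built-in `ToothGrowth` dataset as a stand-in (the two candidate models are hypothetical examples, not part of the course materials). `AIC()` accepts any number of fitted models and returns a data frame with one row per model.

```r
# Step 1: fit each candidate model (here both with aov)
m_additive    <- aov(len ~ supp + dose, data = ToothGrowth)
m_interaction <- aov(len ~ supp * dose, data = ToothGrowth)

# Step 2: pass all the models to AIC(); the result has columns
#   df  = number of estimated parameters
#   AIC = the AIC score (lower is better)
aic_scores <- AIC(m_additive, m_interaction)
print(aic_scores)

# Name of the model with the lowest AIC
best_model <- rownames(aic_scores)[which.min(aic_scores$AIC)]
print(best_model)
```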

Practice: MMI

- Fit the following four candidate models using the most appropriate test for each

- HA: Penguin body mass differs among species

- HA: Penguin body mass differs between sexes

- HA: Penguin body mass differs among species and between sexes

- HA: Penguin body mass differences between sexes depend on the species

- Which model best fits the data?

- I.e., which model has the lowest AIC?

- What is the next best model?
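One possible setup for this practice, sketched under the assumption that the data come from the `palmerpenguins` package. Two choices here are worth flagging. First, AIC scores are only comparable across models fit to the same rows, so missing values are dropped once, before any model is fit. Second, `AIC()` needs fitted model objects (e.g. from `lm()` or `aov()`); the output of `t.test()` is not one, so the sex-only hypothesis is fit as a one-way `aov()`, which with two groups is equivalent to an equal-variance t-test.

```r
# Assumes the palmerpenguins package is installed:
#   install.packages("palmerpenguins")
# Use the same complete-case rows for every candidate model
peng <- na.omit(palmerpenguins::penguins[, c("body_mass_g", "species", "sex")])

# One candidate model per alternate hypothesis (HA)
m_species     <- aov(body_mass_g ~ species, data = peng)        # differs among species
m_sex         <- aov(body_mass_g ~ sex, data = peng)            # differs between sexes
m_additive    <- aov(body_mass_g ~ species + sex, data = peng)  # species and sex
m_interaction <- aov(body_mass_g ~ species * sex, data = peng)  # sex effect depends on species

aic_table <- AIC(m_species, m_sex, m_additive, m_interaction)

# Delta AIC: how far each model is from the best (lowest-AIC) model
aic_table$delta_AIC <- aic_table$AIC - min(aic_table$AIC)

# Sort so the best-supported model is first and the next best is second
aic_table <- aic_table[order(aic_table$AIC), ]
print(aic_table)
```

Models whose `delta_AIC` is below 2 are, by the rule of thumb above, about as well supported as the top model.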

Temperature Check

How are you Feeling?

Instructor Evaluations

- Today is the first day of the second half of the course!

- I hope you all are having a fun time

- Hopefully not ironic to say that after two days of stats

- Would really appreciate you filling out an anonymous evaluation for me!

- What am I doing well?

- What could I improve on for the rest of the course?

- Any other feedback you’d like to share?

Upcoming Due Dates

Due before lab

(By midnight)

- Muddiest Point #5

- Draft 1 of Function Tutorials

- Double check rubric to see that you’re not leaving any points on the table!

Due before lecture

(By midnight)

- Homework #5

Free Work on Function Tutorials

- Draft 1 is due tomorrow night at midnight!

- Tips for success:

- Check out the rubric and make sure you don’t miss any “easy” points

- Don’t leave after this slide!

- I.e., make good use of this free work time to make sure you’re looking good for that due date

- If you have questions, ask them now during this free work time